Capturing Website Data for Offline Analysis: A Step-by-Step Guide

Learn how to capture website data for offline analysis: which tools to use, and how to collect, store, and examine the data efficiently.

Table of Contents

- Introduction

- Why Capture Website Data?

- Tools for Capturing Website Data

- Step-by-Step Guide

- Best Practices

- Potential Issues and Solutions

- Conclusion

Introduction

Capturing website data and analyzing it offline is a practical skill for anyone who works with web content. This guide covers the main methods and tools for downloading web data and storing it so that you can use it for later analysis and strategic decisions.

Why Capture Website Data?

There are several good reasons to capture website data for offline use. Knowing them helps you judge how much value captured data can bring to your work.

Benefits of Offline Analysis

Offline analysis lets you work with web content without a live internet connection, so the data is available whenever you need it. A saved copy also stays fixed after capture, which means you can analyze it repeatedly and, by capturing the same pages at intervals, compare snapshots to study trends and patterns that inform business strategy.
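Working offline starts with a local copy. The sketch below is one minimal way to keep one, assuming you already have a page's HTML as a string: it stores each capture as a timestamped file so that later snapshots can be compared. The function names and the `<directory>/<host>/<timestamp>.html` layout are illustrative assumptions, not a standard.

```python
from datetime import datetime, timezone
from pathlib import Path
from urllib.parse import urlparse


def save_snapshot(page_html: str, url: str, directory: str) -> Path:
    """Write raw HTML to <directory>/<host>/<UTC timestamp>.html and return the path."""
    # Replace ":" (e.g. from a port number) so the folder name is valid on every OS.
    host = urlparse(url).netloc.replace(":", "_") or "unknown-host"
    folder = Path(directory) / host
    folder.mkdir(parents=True, exist_ok=True)
    # Colon-free UTC timestamp; lexical order of filenames matches capture order.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    path = folder / f"{stamp}.html"
    path.write_text(page_html, encoding="utf-8")
    return path


def list_snapshots(directory: str, host: str) -> list[Path]:
    """Return saved snapshots for one host folder, oldest first."""
    return sorted((Path(directory) / host).glob("*.html"))
```

Plain files are used here because they can be opened with any tool later; a database would work equally well for larger captures.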

Tools for Capturing Website Data

Choosing the right tool is critical for capturing website data effectively. The two most common categories of tools are described below.

Web Scrapers

Web scrapers are programs designed to extract data from websites. They range from simple tools capturing data from one or two sources to complex setups that gather information from multiple sources automatically.
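As a minimal sketch of what a scraper does at its core, the example below uses Python's standard `html.parser` module to pull link targets and `h1`, `h2`, and `h3` heading text out of an HTML string. The class and function names are hypothetical; a real scraper would add downloading, error handling, and selectors tailored to the target site.

```python
from html.parser import HTMLParser


class LinkAndHeadingParser(HTMLParser):
    """Collect href values from <a> tags and the text of h1-h3 headings."""

    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []
        self.headings: list[str] = []
        self._in_heading = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip anchors without a link target
                self.links.append(href)
        elif tag in self.HEADINGS:
            self._in_heading = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in self.HEADINGS and self._in_heading:
            # Join text from nested tags such as <em> into one heading string.
            self.headings.append("".join(self._buffer).strip())
            self._in_heading = False

    def handle_data(self, data):
        if self._in_heading:
            self._buffer.append(data)


def scrape(page_html: str) -> dict[str, list[str]]:
    """Parse one HTML document and return its headings and links."""
    parser = LinkAndHeadingParser()
    parser.feed(page_html)
    parser.close()
    return {"headings": parser.headings, "links": parser.links}
```

To cover several sources, you would call `scrape()` on each downloaded page and merge the results.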

Browser Extensions

Browser extensions are another effective option. They run inside your web browser, so you can download data directly from the sites you visit, and they install quickly without requiring programming knowledge.

Step-by-Step Guide

Here’s a practical guide to capturing website data in a structured manner.

Selecting Appropriate Tools

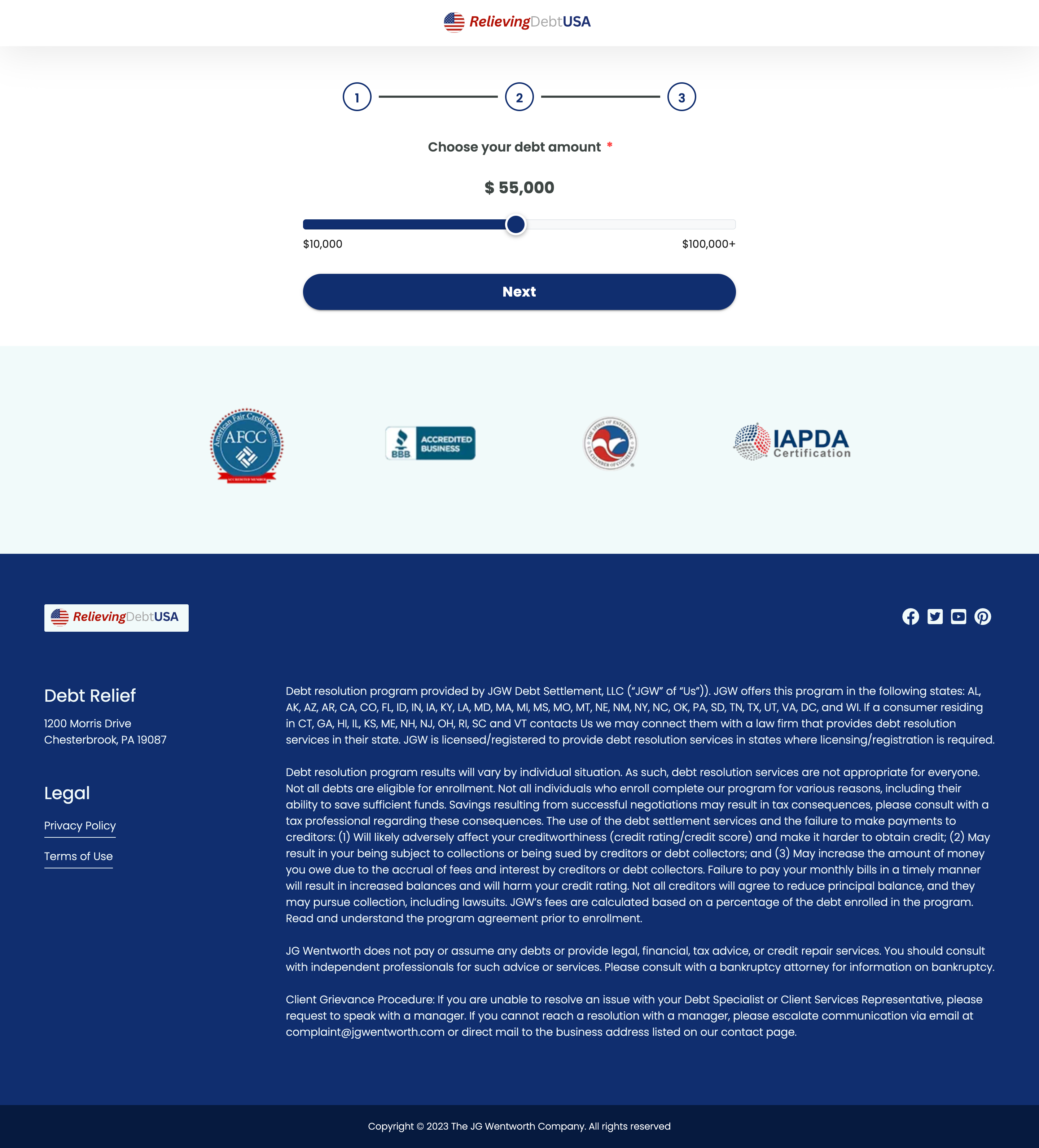

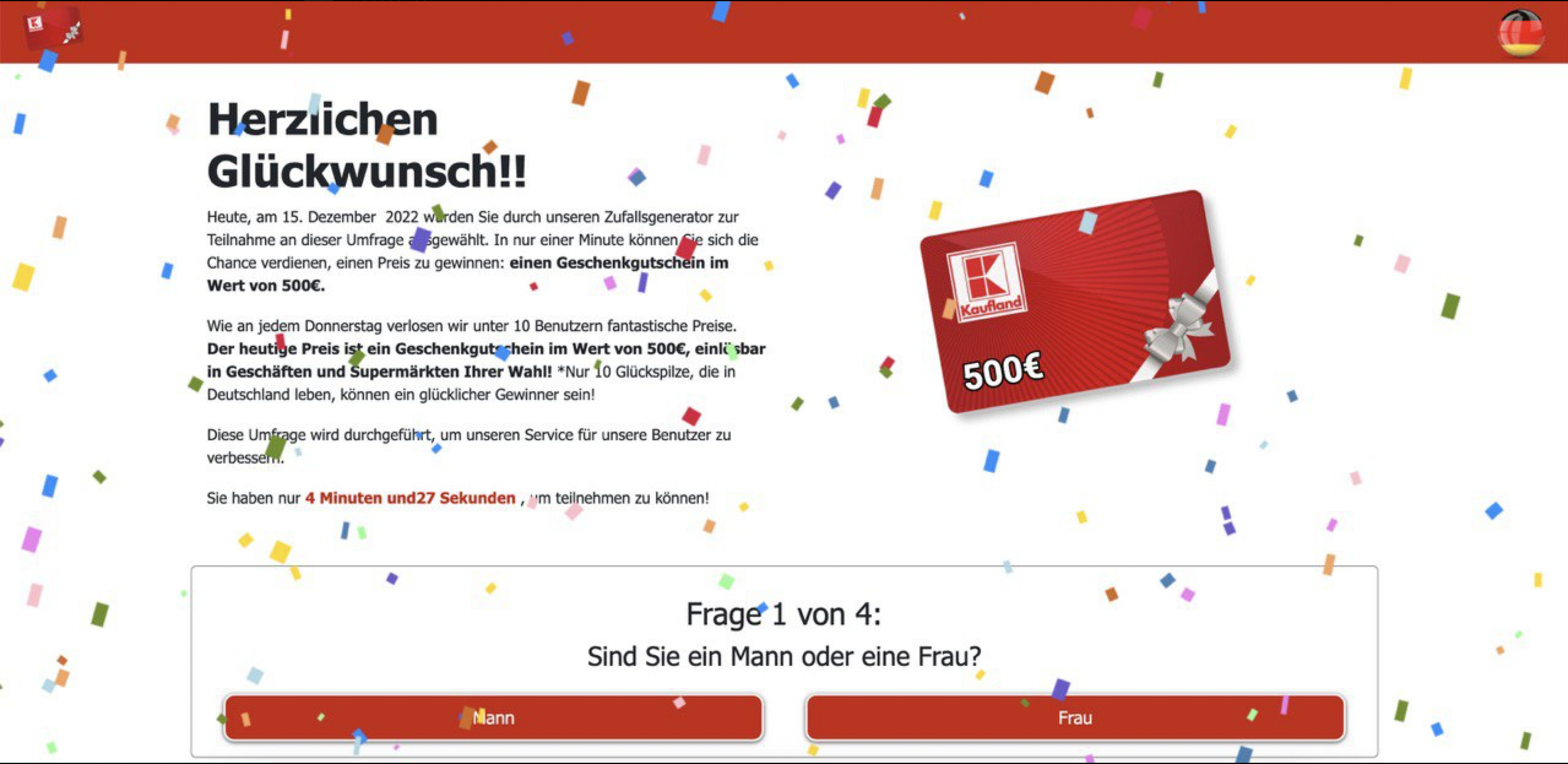

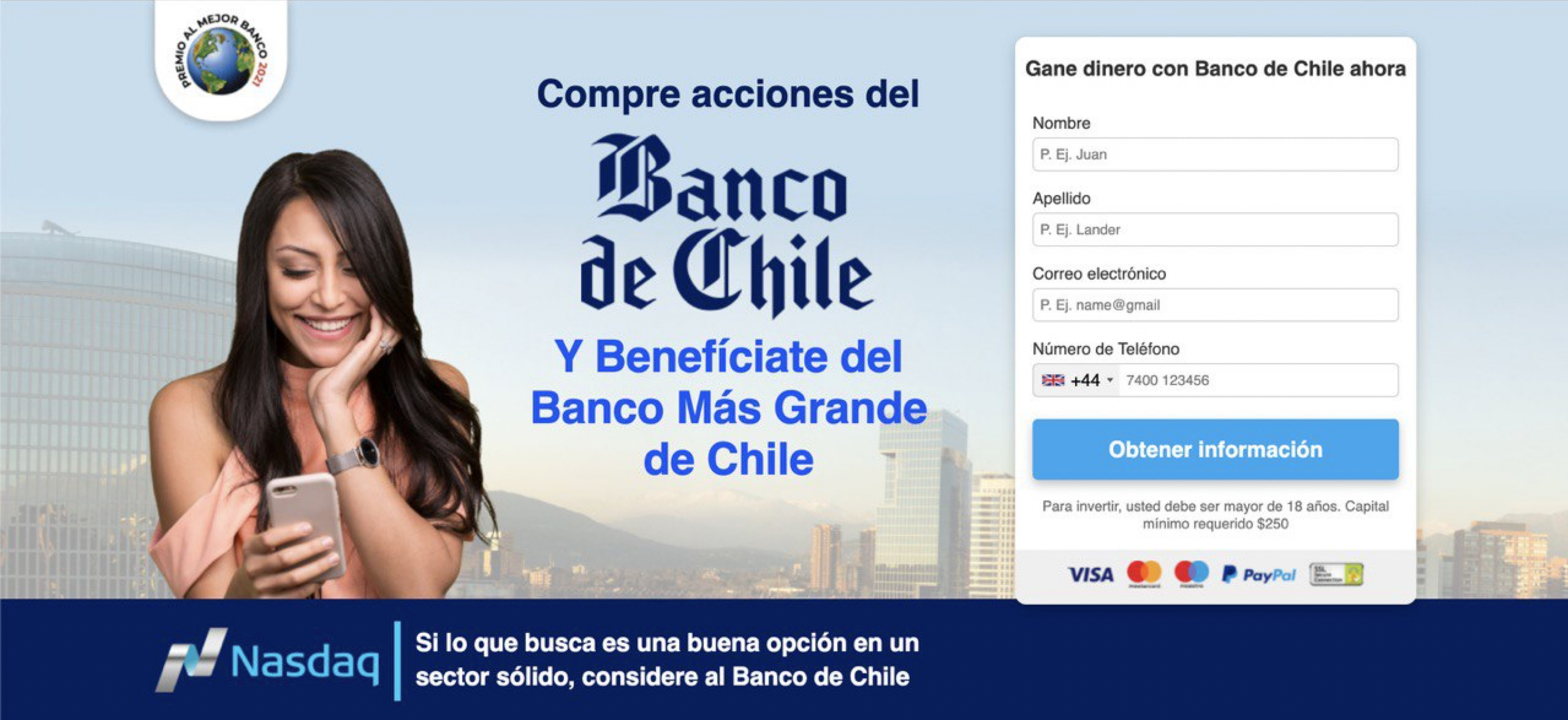

Begin by identifying the tools that best fit your needs. Consider how complex the data is, how much of it you need, and how much manual work the process will require. For landing pages, one option is Landing Page Ripper, a tool designed to scrape and rip landing page content.
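One practical factor when judging complexity is whether the data is present in the page's raw HTML or inserted later by JavaScript. The hypothetical helper below is a rough heuristic under that assumption: if text you can see in the browser does not appear in the HTML the server returns, a simple HTTP-based scraper will likely miss it, and you may need a tool that renders pages.

```python
import html
import re


def likely_needs_rendering(raw_html: str, visible_text: str) -> bool:
    """Rough heuristic: True if text seen in the browser is missing from the raw HTML.

    Text absent from the server's HTML response was probably inserted by
    JavaScript, which a plain HTTP scraper does not execute.
    """
    # Replace tags with spaces, decode entities such as &amp;, collapse whitespace.
    text = re.sub(r"<[^>]+>", " ", raw_html)
    text = re.sub(r"\s+", " ", html.unescape(text))
    needle = re.sub(r"\s+", " ", visible_text).strip()
    return needle.lower() not in text.lower()
```

This check can produce false negatives, for example when the value sits inside an inline `<script>` block; in that case a scraper may still be able to extract it from the raw HTML.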

Capturing the Data

Once you have selected a tool, configure it to target the specific website data you need. Most tools let you specify the URLs to visit, the types of data to collect, and how often to capture it. These settings keep data collection organized and ensure that only relevant information is gathered.
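A minimal sketch of such a configuration in Python, assuming a job consists of a URL list, the content types to keep, and an interval between capture rounds. `CaptureJob`, `fetch_if_relevant`, `run_once`, and `run_rounds` are hypothetical names; downloading uses the standard `urllib.request` module.

```python
import time
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass
class CaptureJob:
    """Hypothetical job description: which pages, which data types, how often."""
    urls: list[str]
    content_types: tuple[str, ...] = ("text/html",)
    interval_seconds: float = 3600.0
    user_agent: str = "offline-analysis-sketch/0.1"


def fetch_if_relevant(url: str, job: CaptureJob, timeout: float = 10.0) -> Optional[str]:
    """Download url; return None if its Content-Type is not one the job asked for."""
    req = urllib.request.Request(url, headers={"User-Agent": job.user_agent})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        if resp.headers.get_content_type() not in job.content_types:
            return None
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def run_once(job: CaptureJob, pause: float = 1.0) -> dict[str, str]:
    """Fetch every URL once, pausing between requests to limit load on the server."""
    pages: dict[str, str] = {}
    for i, url in enumerate(job.urls):
        if i:
            time.sleep(pause)
        body = fetch_if_relevant(url, job)
        if body is not None:
            pages[url] = body
    return pages


def run_rounds(job: CaptureJob, rounds: int, pause: float = 1.0) -> list[dict[str, str]]:
    """Repeat run_once `rounds` times, waiting job.interval_seconds between rounds."""
    results = []
    for r in range(rounds):
        if r:
            time.sleep(job.interval_seconds)
        results.append(run_once(job, pause))
    return results
```

For long-running captures, a scheduler such as cron calling `run_once` is often simpler than keeping a Python loop alive.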

Saving Data Efficiently

Save collected data in formats that integrate smoothly with your analysis tools. Common choices are CSV for flat, tabular data, and JSON or XML for nested records; all three are supported by most data analysis and reporting software.
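A minimal sketch that writes the same records to all three formats with Python's standard `csv`, `json`, and `xml.etree.ElementTree` modules. It assumes each record is a flat dictionary of strings whose keys are valid XML element names; `save_records` is a hypothetical helper.

```python
import csv
import json
import xml.etree.ElementTree as ET


def save_records(records: list[dict[str, str]], stem: str) -> None:
    """Write flat records to <stem>.csv, <stem>.json and <stem>.xml."""
    fieldnames = sorted({key for record in records for key in record})

    # CSV: one row per record; keys missing from a record become empty cells.
    with open(f"{stem}.csv", "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(records)

    # JSON: a list of objects, each record kept exactly as captured.
    with open(f"{stem}.json", "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)

    # XML: <records><record><key>value</key>...</record></records>
    root = ET.Element("records")
    for record in records:
        item = ET.SubElement(root, "record")
        for key, value in record.items():
            ET.SubElement(item, key).text = str(value)
    ET.ElementTree(root).write(f"{stem}.xml", encoding="utf-8", xml_declaration=True)
```

In practice you would usually pick the one format your analysis tool reads best rather than writing all three.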

Best Practices

Make sure your data collection complies with legal requirements and respects privacy settings. Using tools ethically and responsibly avoids legal repercussions and also protects the integrity and reliability of the data you collect for analysis.
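One widely used convention is a site's `robots.txt` file, which lists paths that automated clients are asked not to fetch and sometimes a delay to wait between requests. A minimal sketch using Python's standard `urllib.robotparser`; `check_robots` is a hypothetical helper, and honoring `robots.txt` is a courtesy that does not by itself settle legal or privacy questions.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser


def check_robots(robots_txt: str, user_agent: str, url: str) -> tuple[bool, Optional[float]]:
    """Return (allowed, crawl_delay) for url according to the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # crawl_delay() returns None when no Crawl-delay applies to this user agent.
    return parser.can_fetch(user_agent, url), parser.crawl_delay(user_agent)
```

Against a live site, you would instead call `parser.set_url("https://example.com/robots.txt")` followed by `parser.read()` before checking URLs.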

Potential Issues and Solutions

While capturing website data, you may encounter challenges such as content loaded dynamically by JavaScript, CAPTCHAs, and complex data structures. Common solutions include using more advanced scraping tools, routing requests through proxies, and using the site's API where one is available.
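Two related techniques can be sketched with Python's standard library: retrying transient failures with exponential backoff, and routing requests through a proxy with `urllib.request.ProxyHandler`. This is a minimal sketch under stated assumptions; the helper names and the set of status codes treated as retryable are illustrative choices, and it does not attempt to deal with CAPTCHAs.

```python
import random
import socket
import time
import urllib.error
import urllib.request
from typing import Callable, Optional

# Assumption for this sketch: rate limiting and server errors are worth retrying.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def make_opener(proxy_url: Optional[str] = None) -> urllib.request.OpenerDirector:
    """Build an opener that sends HTTP and HTTPS traffic through proxy_url, if given."""
    handlers = []
    if proxy_url:
        handlers.append(urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url}))
    return urllib.request.build_opener(*handlers)


def with_retries(fetch: Callable[[str], str], url: str,
                 attempts: int = 4, base_delay: float = 1.0) -> str:
    """Call fetch(url), retrying transient failures with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fetch(url)
        except urllib.error.HTTPError as exc:
            # Errors such as 404 will not fix themselves, so fail immediately.
            if exc.code not in RETRYABLE_STATUS or attempt == attempts - 1:
                raise
        except (urllib.error.URLError, socket.timeout, ConnectionError):
            if attempt == attempts - 1:
                raise
        # Wait base_delay * 1, 2, 4, ... plus up to 10% random jitter.
        delay = base_delay * (2 ** attempt)
        time.sleep(delay + random.uniform(0, delay * 0.1))
    raise AssertionError("unreachable")
```

For real requests, `fetch` could be a small function that calls `make_opener(...).open(url, timeout=10)` and decodes the response body; the proxy address you pass in is your own.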

Conclusion

Capturing website data for offline analysis offers significant benefits for businesses and researchers alike. With the right tools and methods, you can gather data efficiently and analyze it to derive actionable insights. Consider using a landing page ripper tool to streamline the data capture process for web analytics.